Photo by Chris Ried on Unsplash

Machine Learning Model to Microservice Part 0: Roadmap

Introduction to a 6-part series on converting model code and weights into a microservice

Why a series on converting a model into a microservice?

Most machine learning courses focus on how machine learning or deep learning works and how to train models for specific tasks. In this series of posts, I will explain how to take a pre-trained machine learning model, with its weights in hand, and turn it into a microservice. The microservice has a single job: serving inference for the pre-trained model.
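To make that end goal concrete, here is a minimal sketch of a single-purpose inference service. It is an illustration only. It uses Python's standard library (`http.server`) instead of Flask or FastAPI, the frameworks the series itself uses, so it runs without extra dependencies. `DummyModel`, its weights, `handle_inference`, `run`, and the `/predict` route are all hypothetical placeholders. A real service would load the pre-trained weights from disk.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


class DummyModel:
    """Hypothetical stand-in for a pre-trained model.

    A real model would load its weights from a file; here they are passed in.
    """

    def __init__(self, weights):
        self.weights = weights

    def predict(self, features):
        # Placeholder "inference": a weighted sum of the input features.
        return sum(w * x for w, x in zip(self.weights, features))


# Load the model once at startup, not on every request.
MODEL = DummyModel(weights=[0.5, -1.0, 2.0])


def handle_inference(payload: dict) -> dict:
    """Framework-independent inference logic, easy to unit test."""
    return {"prediction": MODEL.predict(payload["features"])}


class InferenceHandler(BaseHTTPRequestHandler):
    """Exposes the single inference function as a POST /predict endpoint."""

    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404, "Unknown route")
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length))
            result = handle_inference(payload)
        except (json.JSONDecodeError, KeyError, TypeError):
            self.send_error(400, "Expected JSON like {\"features\": [...]}")
            return
        body = json.dumps(result).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def run(host: str = "127.0.0.1", port: int = 8000) -> None:
    """Start the service and block until interrupted."""
    HTTPServer((host, port), InferenceHandler).serve_forever()
```

Calling `run()` starts the service. A request such as `POST /predict` with the body `{"features": [1, 2, 3]}` should then return `{"prediction": 4.5}`. The inference logic lives in `handle_inference`, outside the HTTP handler, so it can be unit tested without starting a server.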

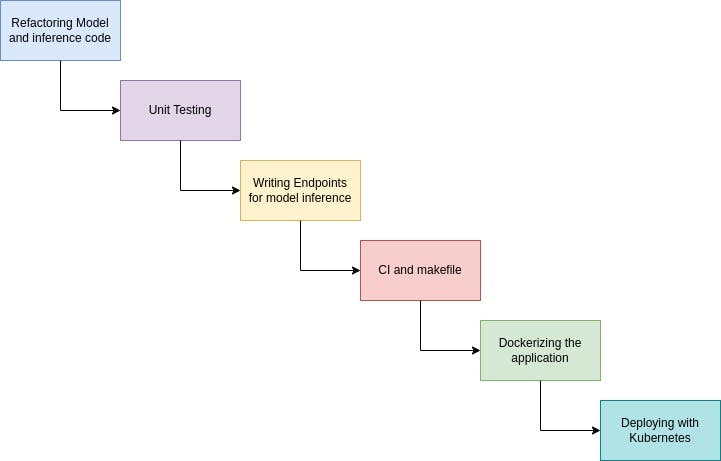

To do this, we will follow the roadmap shown in the figure. The roadmap is deliberately generic. Instead of depending on a specific tool or platform, it can be built from basic open-source tools such as Python microframeworks (Flask/FastAPI), Docker, etc.

Pre-requisites

- Programming experience with Python (if you know how to write classes and functions, you are good to go).

- Ideally, some experience with a deep learning framework, although the series can be followed without it.

The Roadmap

The posts will cover the following topics, in order:

- Code Structure and Quality - Structuring the repository with elements like linting, pre-commit hooks, virtualenvs, etc.

- Testing - Unit testing model inference and measuring test coverage.

- Let us Serve - Writing a web endpoint with Flask or FastAPI.

- Continuous Integration - Adding continuous integration (Jenkins/GitHub Actions) and a Makefile.

- Containerization - Dockerizing the application with inference exposed as an endpoint.

- Deploy and Document - Deploying with Kubernetes and documenting the microservice.

If you find this useful, please like, comment, and share it with others who might find it interesting.